It’s Ada Lovelace‘s 197th birthday today and the perfect day for my last post about Babbage‘s computing engines. This one is about the famous first program for calculating the Bernoulli series which appeared in Note G of her notes on Babbage’s analytical engine. One of the odd things about this program is that it’s widely known and recognized as an important milestone – but as far as I can determine not widely understood. I haven’t found even one description of what it does and how it does it, except for the original note.

It’s Ada Lovelace‘s 197th birthday today and the perfect day for my last post about Babbage‘s computing engines. This one is about the famous first program for calculating the Bernoulli series which appeared in Note G of her notes on Babbage’s analytical engine. One of the odd things about this program is that it’s widely known and recognized as an important milestone – but as far as I can determine not widely understood. I haven’t found even one description of what it does and how it does it, except for the original note.

In this post I’ll give such a description. It has roughly three parts. First I’ll give a quick context – what is Note G, that kind of thing. Then I’ll derive the mathematical rules the program is based on. I’ll roughly follow the same path as Note G but I’ll include a lot more steps which should make it easier to digest. Note G is very concise and leaves a lot of steps to the reader and the derivation is quite clever so some of those steps can be tricky. (You can also just skip that part altogether and take the result at the end as given.)

In the last part I’ll write up a modern-style program in C that does the same as the Note G program and then break it down to get the program itself. Finally I’ll do what you might call a code review of the program.

Okay, let’s get started.

Background

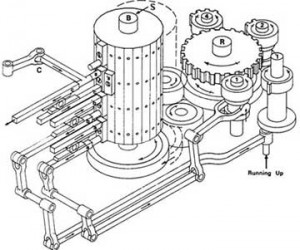

I won’t go into too many general details about Babbage, Ada Lovelace, or the analytical engine. There’s plenty of resources on them and their history on the web, including other posts I’ve written about the difference engine (what motivated it, how did it work) and programming the analytical engine (table code, microcode, and punched cards).

In 1840, after Babbage had been working on the analytical engine for a while, he went to Turin and discussed his ideas with a group of engineers and mathematicians. Based on those conversations one of them, Luigi Menabrea, published an article about the engine in French in 1842. Soon after that Ada Lovelace translated the article into English and Babbage encouraged her to write an original article. She did – sort of – but rather than write a separate article she did it in the form of notes to her translation of Menabrea’s article. Just to give an idea of how much she added, her translation with notes, published in 1843, was three times as long as the original. One of the notes, Note G (they were named from A to G), presented the program I’ll describe in this post.

We’ll get to that very soon but first a few words about what it calculates, the Bernoulli series.

Bernoulli

This is the first few values of the Bernoulli series:

| Name | B0 | B1 | B2 | B3 | B4 | B5 | B6 | B7 | … |

| Value | 1 | -1/2 | 1/6 | 0 | -1/30 | 0 | 1/42 | 0 | … |

It’s one of those mathematical objects, like e and π, that keep appearing in different places and seem to have a special significance within the structure of mathematics. One place it appears is in Taylor expansions of exponential and trigonometric functions – for instance, it holds that

The Bernoulli series is not that difficult to compute but it’s not trivial either. If you want to demonstrate the power of a computing engine it’s not a bad choice.

Part of Note G is concerned with deriving a way to calculate the series and that’s what we’ll start with, using the formula above in combination with a second identity, one you’re probably already familiar with, the Taylor expansion of ex:

If we plug this into the left-hand side of the previous equation in place of we get

This means that the original equation can also be written as

Multiplying the denominator from the left-hand side onto both sides we get this identity:

This looks like we’ve gone from bad to worse, right? Both series on the right are infinite and we have an inconvenient variable x stuck in there which we need to get rid of. This is where the cleverness comes in.

We’re not going to multiply these two series together, but if we were to do it we know what the result would look like. It would be a new series in x of the form:

The 1 on the left-hand side even tells us what those coefficients are going to be: c0 is going to be 1 and all the remaining cis are going to be 0. And even though the full product will be infinite, the individual coefficients are all nice and finite; those we can derive. Here is the first one, which we know will be 1:

The only unknown here is B0 and if we solve for it we get that it’s 1. Let’s try the next one:

Since we now know what B0 is B1 is the only unknown; solving for it gives us -1/2. One more time,

Solving for B2 gives us 1/6.

In general, if we know the first k-1 Bernoulli numbers we can now calculate the k‘th by solving this:

This only gets us part of the way though, it needs to be cleaned and simplified before we can code it. (And again, if you find yourself getting bored feel free to skip to the next section where we start coding).

The first simplification is to solve Bk up front. As you can see above the term we’re interested in is always the last one and has the form

This we can solve for in the original equation:

This simplifies the process of calculating Bk, we now just have to plug the previous values into this equation to get the next one.

We’ve already computed the first few values of the series so we can calculate the first two terms up front:

Pluggin them back into the formula we get:

As the table of Bernoulli values at the beginning suggested all the numbers at odd indexes greater than 1, B3, B5, etc., are zero. And since we’ve already handled 1 as a special case we can just drop all the odd terms:

Above each Bi has two separate factors being multiplied onto it; we can multiply those together to make just one factor:

Since we’re only even interested in even values of k we don’t lose generality if we assume that that k=2n for some integer n.

Okay, now we’re getting close to the final form that we’ll code up but first I’ll have to stop and do something distasteful: I’ll change how we denote the values in the Bernoulli series. What you’ve seen up until this point is the modern, standard use of indices: B1 is -1/2, B2 is 1/6, B3 is 0 etc. In Note G, however, Lovelace numbers the values differently. She skips the first two values and the rest are shifted by 1 so that B1 denotes the value we would call B2, B2 is what we would call B3 and so on:

| Value | 1 | -1/2 | 1/6 | 0 | -1/30 | 0 | 1/42 | 0 | … |

| Modern | B0 | B1 | B2 | B3 | B4 | B5 | B6 | B7 | … |

| Note G | not used | B1 | B2 | B3 | B4 | B5 | B6 | … | |

Up until now I’ve been using (what we call) B0 and B1 so it made sense to use the modern convention but now we only have terms left that have a name in Note G, so I’ll switch to her convention. This means that I’ll write the formula as

Note that the meaning of n doesn’t change so the formula is almost the same as it was before, the only difference is the numbering of the Bis.

At this point it’s convenient to give the factors being multiplied onto Bi a name; we’ll call them . So the above is the same as

where

The second one looks hairy but can be boiled down:

The last step is possible because there is, very conveniently, the same number of terms in the numerator and denominator. What’s useful about this form is that it makes it clear that each of the Ais is the previous one with another factor multiplied onto it (except for A0 which is special):

We’re slowly starting to see a program take shape here: if we know the previous Bi we can iteratively calculate the sequence of Ais and multiply them onto those values, ultimately giving us the next Bi. Now we’ll switch gears and code that up as an actual program.

The program

Let’s quickly recap what all the work above gave us. This formula gives us the next Bernoulli number, given the previous values:

where

Say we wanted to write a program that calculated B7 using this approach, that is, the special case where n is 4 (since, as you may vaguely remember, n is the value such that k = 2n – 1).

Let’s take a crack at implementing this in C. We’ll do it twice: first a straightforward implementation that’s quite similar to the Note G program and then we’ll go back over it again and make some modifications to make is (almost) step-by-step identical to Note G.

First, we can assume that the program has been given n and that we have already computed the preceding Bi. We’ll store those in some variables.

double n = 4; double B1 = 0.166667; // 1/6 double B3 = -0.0333333; // -1/30 double B5 = 0.0238095; // 1/42

The general idea will be to keep a variable A that we’ll multiply successive factors onto so it takes the value of , then

, etc. We’ll the multiply those factors onto the previous Bi, which we’ve already been given, and accumulate the result in another variable,

result.

The first term we’ll calculate directly:

double A = 0.5 * (2 * n - 1) / (2 * n + 1); // A0

double result = A;

Then we calculate the second term, , and subtract it from the result:

A = 2 * n / 2; // A1 double term = B1 * A; // B1 A1 result -= term; // A0 - B1 A1

The we calculate by multiplying the appropriate factor onto

:

A *= (2 * n - 1) / 3 * (2 * n - 2) / 4; // A3 term = B3 * A; // B3 A3 result -= term; // A0 - B1 A1 - B3 A3

And for the last term, , we follow exactly the same pattern except that the factor is slightly different:

A *= (2 * n - 3) / 5 * (2 * n - 4) / 6; // A5 term = B5 * A; // B5 A5 result -= term; // A0 - B1 A1 - B3 A3 - B5 A5 printf("The result is: %g\n", result);

If you run this program it will print

The result is: -0.0333333

which is indeed –1/30 also known as B7. This is, in essence, what the Note G program does. It calculates B7 using this sequence of steps. However, if you were to look at Note G now what you’d see would still look a bit foreign. That’s because C let’s us do things that the analytical engine doesn’t. For instance, C lets us write complex expressions where the analytical engine only does one operation at a time. In C we also don’t need to worry about recurring expressions like n * 2 because the compiler will make sure it only gets calculated once. The analytical engine obviously had no compiler so the programmer has to do that kind of optimizations by hand.

To take all this into account I’ll rewind and go over the program again but this time I’ll include all the extra work. In the code comments I’ll give how the variables in my program maps to variables in Note G.

First off we have the values we’re given as input:

one = 1.0; // V1 two = 2.0; // V2 n = 4.0; // V3 B1 = 0.166667; // V21 B3 = -0.0333333; // V22 B5 = 0.0238095; // V23

Note G doesn’t use constant values, only variables. So to use the values 1 and 2 it needs two variables that are pre-initialized with those values. Also, we’ll assume that all variables are pre-declared and start out with the value 0, we won’t bother declaring then anymore.

The first step is to calculate . The C version was:

double A = 0.5 * (2 * n - 1) / (2 * n + 1); // A0

double result = A;

and here is the same thing in analytical-engine-style C:

two_n_minus_one = two_n_plus_one = numerator = two * n; // V4 V5 V6 two_n_minus_one -= one; // V4 two_n_plus_one += one; // V5 A = two_n_minus_one / two_n_plus_one; // V11 A /= two; result += A; // V13 current_n = n - one; // V10

If you compare the two versions it should be clear that they do the same thing except that in the latter you get all the excruciating details of the temporary variables. But it’s still pretty straightforward. Notice in the next to last step how we add A to result before result has ever been assigned – we’re using the fact that variables that haven’t been used yet can be assumed to be 0.

The next part of the C program calculates :

A = 2 * n / 2; double term = B1 * A; // B1 A1 result -= term; // A0 - B1 A1

In analytical-engine-style this corresponds to:

denominator += two; // V7 A = numerator / denominator; term = B1 * A; // V12 result -= term; current_n -= one;

Instead of recalculating 2*n we already have it in a variable, numerator, and will be decrementing it as we go. Similarly with the denominator which we’ll increment as we go rather than recalculate. Notice again how we increment denominator before it has been initialized and rely on it being 0 so it ends up containing 2.

The next step is to calculate :

A *= (2 * n - 1) / 3 * (2 * n - 2) / 4; // A3 term = B3 * A; // B3 A3 result -= term; // A0 - B1 A1 - B3 A3

In analytical engine style we get:

numerator -= one; denominator += one; factor_1 = numerator / denominator; // V8 A *= factor_1; numerator -= one; denominator += one; factor_2 = numerator / denominator; // V9 A *= factor_2; term = B3 * A; result -= term; current_n -= one;

The first two blocks together calculate and the last part subtracts another term from

result. The last part looks very similar:

A *= (2 * n - 3) / 5 * (2 * n - 4) / 6; // A5 term = B5 * A; // B5 A5 result -= term; // A0 - B1 A1 - B3 A3 - B5 A5

In this code we’re using different constants to calculate A than the previous block, and we’re using B5 instead of B3 in the second step. In the analytical-engine-style code we’ve already decremented the variables we’re using to calculate A so that part is exactly the same as for the previous block, but we still need to use B5 instead of B3:

numerator -= one; denominator += one; factor_1 = numerator / denominator; A *= factor_1; numerator -= one; denominator += one; factor_2 = numerator / denominator; A *= factor_2; term = B5 * A; result += term; current_n -= one;

That’s it – the value of B7 is now stored in result. The only thing left to do is to store the value in a dedicated variable and then reset some of the other variables so we’re ready to calculate the next Bi.

B7 += result; // V24 n += one; // Also reset numerator and denominator.

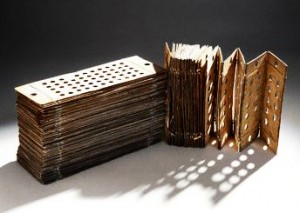

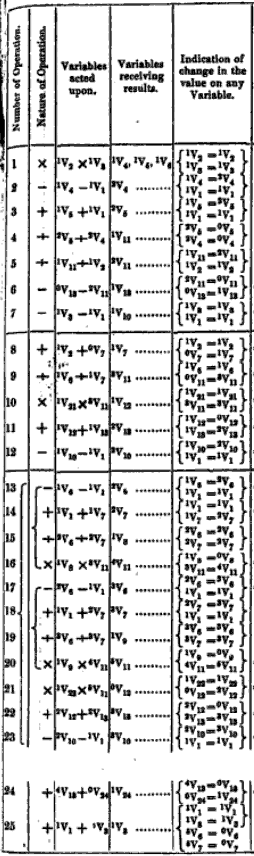

Now, it might look like we’ve just written the world’s most excruciating C program. In fact what’s we’ve done here is step through, instruction-by-instruction, the Bernoulli program in Note G. If you look through that program here on the right you will notice that for each step there is a corresponding line in the analytical-engine-style C program above. (For details on how the table program format works see my post on analytical programming.) Click the program to go to an expanded version.

Now, it might look like we’ve just written the world’s most excruciating C program. In fact what’s we’ve done here is step through, instruction-by-instruction, the Bernoulli program in Note G. If you look through that program here on the right you will notice that for each step there is a corresponding line in the analytical-engine-style C program above. (For details on how the table program format works see my post on analytical programming.) Click the program to go to an expanded version.

Comparing the programs

Now, if you were to go though the program in details you would notice that what I just said isn’t actually true. There are some small differences between the C-style version and what you see in the original notes. Those differences are, I’m afraid, bugs in the original program.

There are three separate issues. The first one is trivial: in step 4 the instruction that should do

A = two_n_minus_one / two_n_plus_one;

is actually switched around and does

A = two_n_plus_one / two_n_minus_one;

That’s a tiny issue that could have been introduced by the printer, and the comment for that step in the table has it the right way round.

Secondly, remember how similar the code for calculating and

was, the only difference being that one used

B3 and the other B5? The second issue is that Note G actually overlooks this difference and where the code for should be simply says to repeat the steps for calculating

. As you’ll see if you click through to the expanded version, in the white space between steps 23 and 24 there is a comment saying “Here follows a repetition of Operations thirteen to twenty-three”.

The third issue is more systematic: the signs are reversed. If you fix the two first issues the result will be correct – except that it has the wrong sign. The sign reversal is also present in the text of the note so I’m not sure if it’s deliberate, but if it is the program calculates something we wouldn’t call the Bernoulli series.

There is also a fourth bonus issue, which is not a bug exactly. At the end in step 25 the code resets some variables such that it’s ready to calculate B9. But if you loop around and run the main program again it won’t give the right result. This is both because some of the variables which the program assumed were 0 on the first run won’t be on the second and because the “loop” after step 23 would need yet another block, this one for calculating . But this is only an issue if you consider the program to calculate the whole Bernoulli sequence rather than just B7.

Now, I want to be sure to put these issues in the right perspective. This is a complex program. I’ve been programming most of my life and it still took me a significant amount of time to understand how it worked. This program was written by a mathematician with no background in programming – they were inventing programming as they went along. On paper. There is an almost frightening intellectual power behind this, both in the program itself and the underlying framework. For me, the fact that we modern programmers, with the benefit of education and experience, can spot a few details out of place is only what you would expect and takes little away from that.

That’s it, you now know how the Note G program works and what each step does. If you’ve found this interesting there’s a good chance you’ll find Lovelace’s original notes interesting too, I recommend reading them. If you want to play around with the program yourself I’ve “ported” it to C; you can find it on github.